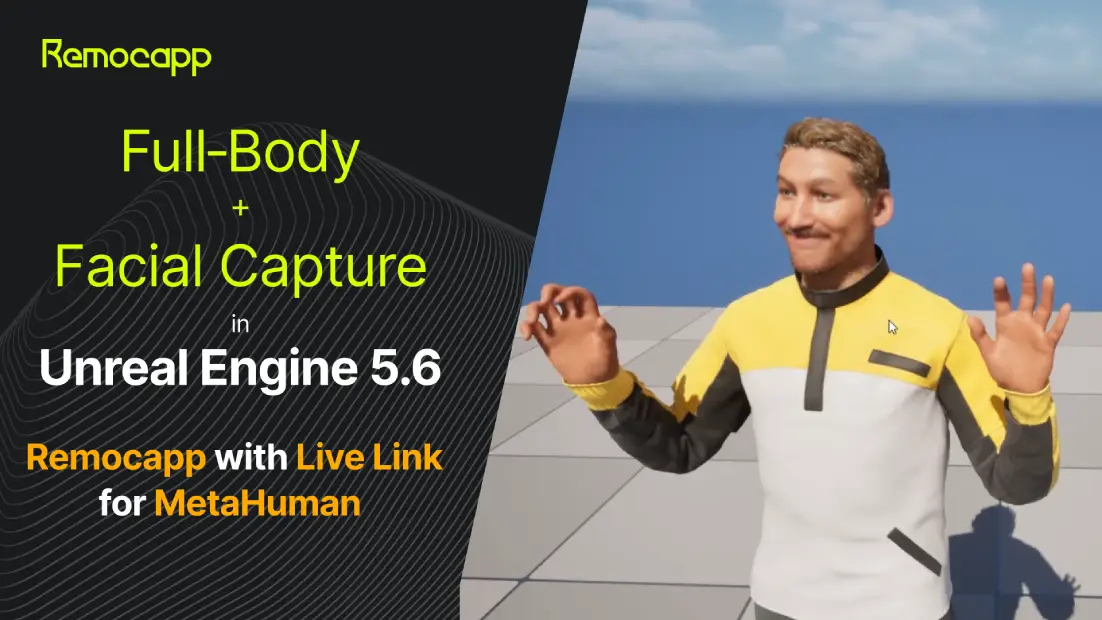

Bring your MetaHumans to life with full-body motion capture from Remocapp and expressive facial animation via Live Link—running together in real time inside Unreal Engine 5.6. It’s ideal for previs, virtual production, cutscenes, livestreams, and indie filmmaking where speed and believability matter. Watch the tutorial below to see what’s achievable and how this blend keeps signals clean: body/head from Remocapp, face from Live Link.

Watch the Tutorial

Watch the tutorial to see how to get started with free motion capture using webcams.

What You Can Achieve

Real-time performance capture

Drive body and head movement with Remocapp while Live Link handles nuanced facial expressions—simultaneously.

Cinematic-quality MetaHuman animation

Create believable performances for previs, virtual production, cutscenes, and livestreams.

Flexible facial sources

Use a webcam, iPhone/iPad (ARKit), or compatible Android/virtual camera for facial tracking.

Clean signal separation

Keep body/head from Remocapp and face from Live Link so that no bone or curve is driven by two sources at once. This keeps the blend stable and avoids double-transform issues.
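The idea of clean signal separation can be sketched outside Unreal as a simple ownership rule: every animation channel has exactly one source. The Python below is only a conceptual illustration, not Remocapp's or Live Link's actual API. The channel names (`body.`, `head.`, `face.` prefixes) and the `merge_frame` helper are hypothetical and were chosen for this example.

```python
from typing import Dict

# Hypothetical channel prefixes, for illustration only; a real MetaHuman rig
# exposes its own bone and curve names.
BODY_HEAD_PREFIXES = ("body.", "head.")
FACE_PREFIX = "face."


def merge_frame(remocapp: Dict[str, float], live_link: Dict[str, float]) -> Dict[str, float]:
    """Build one output frame in which each channel has exactly one source."""
    merged: Dict[str, float] = {}
    # Body and head channels are taken only from the Remocapp frame.
    for name, value in remocapp.items():
        if name.startswith(BODY_HEAD_PREFIXES):
            merged[name] = value
    # Face channels are taken only from the Live Link frame.
    for name, value in live_link.items():
        if name.startswith(FACE_PREFIX):
            merged[name] = value
    return merged


# Both sources report overlapping channels; the merge keeps one owner per channel.
remocapp_frame = {"body.spine_pitch": 0.10, "head.yaw": 0.25, "face.jaw_open": 0.90}
live_link_frame = {"face.jaw_open": 0.40, "head.yaw": 0.80}
frame = merge_frame(remocapp_frame, live_link_frame)
```

In this sketch the head yaw comes from the Remocapp frame and the jaw value from the Live Link frame, even though both sources reported both channels. Because the overlapping values are dropped rather than added together, the same motion is never applied twice.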

Why This Workflow Works

Division of responsibility

Remocapp excels at robust body tracking; Live Link shines at facial motion capture fidelity. Together, you get both.

Low friction, high iteration speed

Plug-and-play sources, immediate feedback in the UE viewport, easy retakes.

Production-friendly

Consistent subject routing via Live Link and Blueprint properties makes the setup repeatable on set.

What You Need

Software

Unreal Engine 5.6+, Remocapp plugin, Remocapp Windows motion capture app, MetaHuman(s).

Hardware

Webcam or mobile device (ARKit/compatible Android) for facial capture.

Project setup

A MetaHuman prepared for Remocapp body animation. If not yet configured, start here.

FAQs

You can stream live, record takes in UE, or do both.

Yes. A standard webcam works for facial capture; ARKit offers additional fidelity if available.

No. Just route the face to Live Link and keep body/head with Remocapp, as shown in the video.